As a preamble, this article complements the one entitled "Image enhancement: Super-Resolution with Deep Learning".

In that article, we showed that super-resolution is of real interest in various industrial sectors. Here, we focus on the deployment process and its performance rather than on how super-resolution works internally.

A functional configuration for real-time and embedded super-resolution

Below is the configuration we consider optimal for running super-resolution on an embedded platform at 25 Hz (25 frames per second) or more.
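At 25 Hz, each frame has a budget of 1000 / 25 = 40 ms for the whole processing chain. The Python sketch below turns that into a simple check. The latency values in the usage example are illustrative placeholders, not measurements, and the check assumes frames are processed one after another (with a pipelined design, sustained throughput matters more than single-frame latency).

```python
from typing import Sequence


def frame_budget_ms(target_hz: float) -> float:
    """Time available per frame, in milliseconds, at the given frame rate."""
    if target_hz <= 0:
        raise ValueError("target_hz must be positive")
    return 1000.0 / target_hz


def meets_realtime(latencies_ms: Sequence[float], target_hz: float = 25.0) -> bool:
    """True if every measured per-frame latency fits within the frame budget.

    Assumes sequential processing: capture, pre-processing, inference and
    post-processing are all counted in each latency value.
    """
    budget = frame_budget_ms(target_hz)
    return all(t <= budget for t in latencies_ms)


if __name__ == "__main__":
    print(frame_budget_ms(25))                 # 40.0
    # Illustrative latencies only, not benchmark results
    print(meets_realtime([31.2, 35.8, 38.9]))  # True
    print(meets_realtime([31.2, 42.5]))        # False
```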

Using the NVIDIA TensorRT SDK

TensorRT is an NVIDIA SDK for high-performance Deep Learning inference on GPUs. It includes an inference optimizer and a runtime environment that together deliver high throughput and low latency for Deep Learning inference applications.

TensorRT can be used from C++ or Python. First, however, the AI model, initially in ONNX format, must be converted into a TensorRT engine (ENGINE format). Some properties of the model can be set during this conversion, notably the input size, the batch size and the precision (from FP32 to FP16, or even INT8).

The model can also be adapted to the target application (training, fine-tuning, etc.).
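The ONNX-to-ENGINE conversion can be driven by `trtexec`, the command-line tool shipped with TensorRT. The Python sketch below only assembles the command line. The file names, the input tensor name `input` and the 1x3x720x1280 (NCHW) shape are hypothetical, and the min/opt/max shape flags assume the ONNX model was exported with dynamic input dimensions.

```python
import shlex
from typing import List, Tuple


def build_trtexec_command(onnx_path: str, engine_path: str,
                          input_name: str = "input",
                          shape: Tuple[int, ...] = (1, 3, 720, 1280),
                          precision: str = "fp16") -> List[str]:
    """Assemble a trtexec command converting an ONNX model into a TensorRT engine.

    Hypothetical defaults: an input tensor named "input" and the NCHW shape
    of one 720p RGB frame (batch size 1). Assumes dynamic axes in the ONNX export.
    """
    if precision not in ("fp32", "fp16", "int8"):
        raise ValueError(f"unsupported precision: {precision}")
    # trtexec shape spec format: <tensor name>:<dim0>x<dim1>x...
    spec = f"{input_name}:" + "x".join(str(d) for d in shape)
    cmd = [
        "trtexec",
        f"--onnx={onnx_path}",
        f"--saveEngine={engine_path}",
        # min = opt = max: the engine is specialized for this single input shape
        f"--minShapes={spec}",
        f"--optShapes={spec}",
        f"--maxShapes={spec}",
    ]
    if precision == "fp16":
        cmd.append("--fp16")
    elif precision == "int8":
        # INT8 normally needs calibration data to preserve accuracy
        cmd.append("--int8")
    # fp32: no precision flag, i.e. trtexec's default build
    return cmd


if __name__ == "__main__":
    cmd = build_trtexec_command("esrgan.onnx", "esrgan_fp16.engine")
    print(shlex.join(cmd))
    # On the Jetson itself, the command could then be run with:
    # subprocess.run(cmd, check=True)
```

Fixing min, opt and max to the same shape lets TensorRT optimize for the exact camera resolution; a range would only be needed if one engine had to serve several input sizes.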

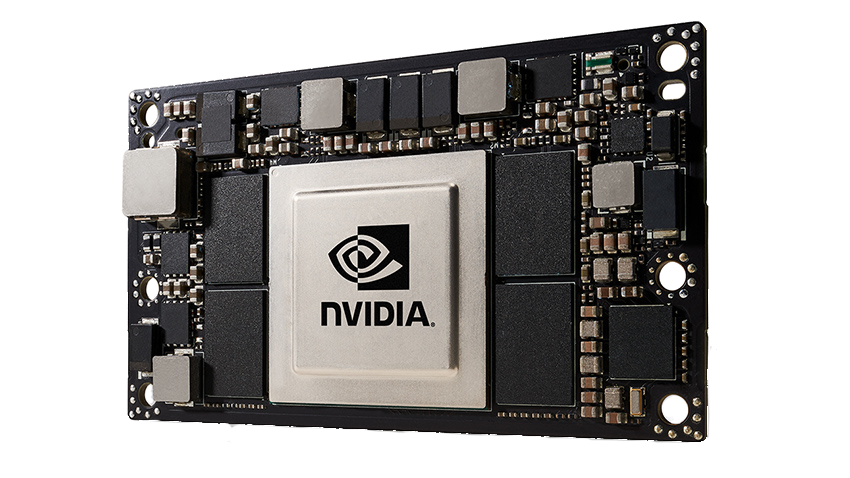

Using an NVIDIA Jetson

NVIDIA's Jetson platforms are well suited to this type of GPU-based AI deployment at the edge (edge computing): the modules are compact, offer high GPU computing capacity and consume little power.

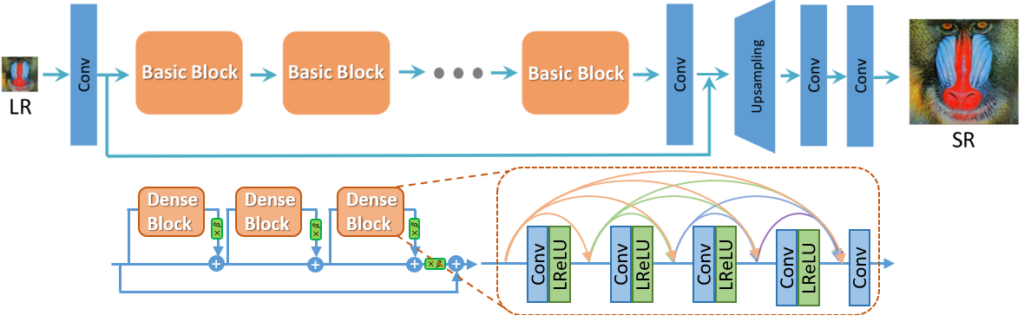

Using the ESRGAN model

ESRGAN (Enhanced Super-Resolution Generative Adversarial Networks) is an artificial neural network that increases image resolution. Typically, this model can generate a 1080p image from a 720p image.

Other magnification factors are available on request, making it easy to increase image resolution by a factor of 3 or 4.
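Going from 720p (1280x720) to 1080p (1920x1080) is a factor of 1.5 on each axis, so the pixel count grows by 1.5² = 2.25; a factor of 4 multiplies it by 16. The small Python helpers below make this arithmetic explicit. The 640x512 sensor size in the example is a hypothetical low-resolution infrared format.

```python
from typing import Tuple


def required_factor(src_height: int, dst_height: int) -> float:
    """Per-axis magnification needed to go from one resolution to another."""
    return dst_height / src_height


def upscaled_size(width: int, height: int, factor: float) -> Tuple[int, int]:
    """Output resolution for a per-axis magnification factor, rounded to whole pixels."""
    return round(width * factor), round(height * factor)


def pixel_gain(factor: float) -> float:
    """Both axes are magnified, so the pixel count grows with the square of the factor."""
    return factor ** 2


if __name__ == "__main__":
    f = required_factor(720, 1080)
    print(f, upscaled_size(1280, 720, f), pixel_gain(f))  # 1.5 (1920, 1080) 2.25
    # Hypothetical 640x512 infrared sensor magnified by 3 and by 4
    print(upscaled_size(640, 512, 3), upscaled_size(640, 512, 4))  # (1920, 1536) (2560, 2048)
```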

Without going into the mathematical details, you'll find below the architecture of the ESRGAN model.

For certain projects, we may be asked to deploy super-resolution on specialized cameras, for example ones operating at infrared wavelengths. This is of great interest because sensors in this spectral band often have low resolution: the price of this kind of sensor is directly proportional to its number of pixels.

Software image enhancement then makes it possible to get the most out of the camera. This is the case, for example, with highly specialized SWIR (short-wave infrared) cameras.

In that case, it is essential to retrain the ESRGAN model on images from a camera operating in the same wavelength range as the final application.
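A common way to build such a training set is to pair each high-resolution image captured in the target wavelength range with a downscaled copy of itself, so that the network learns to map the low-resolution copy back to the original. The sketch below assumes single-channel (grayscale) images stored as nested Python lists and uses simple box averaging. ESRGAN was originally trained on bicubic-downsampled images, so box averaging here is a simplification chosen to keep the example dependency-free, not the degradation used by the original model.

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale image: rows of pixel intensities


def box_downsample(img: Image, factor: int) -> Image:
    """Average non-overlapping factor x factor blocks; leftover rows/columns are dropped."""
    if factor < 1:
        raise ValueError("factor must be >= 1")
    h = len(img) // factor * factor
    w = len(img[0]) // factor * factor
    out: Image = []
    for y in range(0, h, factor):
        row = []
        for x in range(0, w, factor):
            block = [img[y + dy][x + dx] for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def make_training_pair(hr: Image, factor: int) -> Tuple[Image, Image]:
    """Return a (low-res, high-res) pair; HR is cropped so its size is exactly factor x LR."""
    h = len(hr) // factor * factor
    w = len(hr[0]) // factor * factor
    hr_cropped = [r[:w] for r in hr[:h]]
    return box_downsample(hr_cropped, factor), hr_cropped


if __name__ == "__main__":
    hr = [[0, 2, 4, 6],
          [2, 4, 6, 8],
          [1, 1, 1, 1],
          [3, 3, 3, 3]]
    lr, hr_target = make_training_pair(hr, 2)
    print(lr)  # [[2.0, 6.0], [2.0, 2.0]]
```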

Using Docker and Ansible for production deployment

At Imasolia, we also have the expertise to optimize your industrial deployment, in particular with Docker, so that you can flash your Jetsons at scale or deploy your applications on different platforms (cross-compiling).

We also offer an Ansible playbook for configuring your application environment.

Conclusion

In this article, we have presented a typical hardware and software configuration for improving image resolution in real time on an embedded platform.

Whether an image is captured in the visible, infrared or another spectral band, there are many applications for upscaling it.

At Imasolia, we develop standard or customized super-resolution units. So if you have a need, we can help: just ask!